Quicklinks:

- Principles Vs. Laws

- Rule-Based Ethics in AI Systems

- Ethical Frameworks

- Ethical Programming Problems

- Performance and Perspective

- Why Jesus Can’t Save AI

“The End Is Near!” Such is the cry of artificial intelligence (AI) critics concerned about the future of their jobs and their very safety. And for good reason – AI is revolutionizing our world. From enhancing efficiency in various industries to shaping our daily lives, AI has ushered in a new era at a concerning pace. But the unprecedented growth of this powerful technology has led to significant public safety concerns and very few practical solutions.

Federal agencies have expressed concern about bias in AI models that could “automate unlawful discrimination,” along with a determination to protect against it. State regulators have expressed concerns about responsible innovation in AI. But will government regulation and “determination” curb the desires of entrepreneurs and opportunists?

Experts worry that we are in a new arms race to develop more powerful tools in the name of fortune and innovation, while ignoring the potential risks of AI. Many researchers justify a ‘throw caution to the wind’ approach as a necessary evil to stay ahead of the competition. But the reality is that we’re probably going to be outnumbered by those who misuse it. “As AI puts real people out of jobs around the world, bad actors are simultaneously using the same tools to defraud and cause harm,” states Maria Chamberlain of Acuity Total Solutions.

And that’s why AI needs to come to Jesus. Now, hear me out. We’re not talking about sending AI tools on a virtual pilgrimage or baptizing data centers. But as AI becomes increasingly sophisticated, and at times exhibits inexplicable behaviors, the need for robust ethics and security measures becomes undeniable. In a dynamic field where fixed rules can fall short, could principles taken from religion provide a useful framework?

Principles > Laws

Technology and religion typically don’t mix. But like a digital-age Dr. Frankenstein, we built the monster, and now the monster needs to be taught. And the concept of “principles” taught in some religions can be remarkably flexible compared with literal laws.

Governments typically rely on stringent laws to dictate how their citizens live. But as we’ve seen, these laws can be shaped by public sentiment, such as nationalism and racism, and by the prevailing opinions of judges and lawmakers at a particular moment in time. Some spiritual leaders, on the other hand, promote the idea of universal principles that empower people to make decisions for themselves. The argument, then, is that principles are superior because they are often more flexible than rigid laws.

In fact, principles provide the underlying basis for most laws. So while laws apply to a specific time or situation, principles are timeless and dynamic.

For example, a speed limit on a busy highway might be 55 miles per hour. That’s a law. But is that law a safe guideline when circumstances change? Is 55 mph safe in 3 inches of snow? The principle to drive safely is much more useful to a conscientious driver. In fact, you don’t even need to tell most of us that simple principle. We’ll slow down instinctively because it’s ingrained in our sense of self-preservation and safety.

Now, what does this have to do with AI? AI lacks simple ethical imperatives. Even if it understands the rules, or laws, it often fails to understand the principles behind them. As an unfeeling computational model, it seeks answers and results regardless of potential outcomes.

This is the threat to the rest of us.

AI doesn’t care if it makes millions of real-world jobs obsolete. It’s simply doing its job, and it’s frighteningly good at it. Add in unpredictable behavior, biased data, and bad actors, and things can get really ugly. This is why it can’t be trusted to make decisions.

The Challenges of Fixed Rule-Based Ethics in AI Systems

AI systems rely on algorithms to make decisions. Those algorithms are like the traffic laws in your city that direct how fast you drive and which roads you take to reach a given destination. As with driving on snowy days, fixed rules for ethical outputs can break down when conditions are unpredictable or changing. Ethical dilemmas also involve subjective judgments that are difficult to encode into rigid rules. And the sheer number of rules needed to cover every possible scenario would be immense.

The real world is dynamic and constantly evolving, making it challenging to predict every scenario that an AI system may encounter. Defining specific rules for every possible ethical situation is an arduous task, as there are countless variations and factors to consider.
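
To make the speed-limit analogy concrete, here is a minimal Python sketch contrasting a fixed rule with a context-aware one. The function names and the snow adjustment (10 mph off per inch of snow, with a 15 mph floor) are hypothetical values chosen purely for illustration, not real traffic guidance.

```python
SPEED_LIMIT_MPH = 55  # the posted limit from the highway example


def fixed_rule_allows(speed_mph: float) -> bool:
    """A fixed rule: anything at or under the posted limit is acceptable."""
    return speed_mph <= SPEED_LIMIT_MPH


def safe_speed_mph(snow_inches: float) -> float:
    """Hypothetical context-aware limit: subtract 10 mph per inch of snow,
    but never drop below a 15 mph floor. Illustrative numbers only."""
    return max(SPEED_LIMIT_MPH - 10 * snow_inches, 15)


def principle_allows(speed_mph: float, snow_inches: float) -> bool:
    """The 'drive safely' principle, approximated by checking conditions."""
    return speed_mph <= safe_speed_mph(snow_inches)


if __name__ == "__main__":
    speed, snow = 50, 3
    print("fixed rule allows 50 mph in 3 in. of snow:", fixed_rule_allows(speed))
    print("principle allows 50 mph in 3 in. of snow:", principle_allows(speed, snow))
```

The fixed rule approves 50 mph in 3 inches of snow, while the condition-aware check rejects it. Notice, though, that the "principle" version is still just a rule with more inputs: every new condition (ice, fog, heavy traffic) would need another term, which is exactly the scaling problem described above.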

Adaptable and Flexible Ethical Frameworks

In theory, adaptable and flexible frameworks would allow AI systems to consider contextual factors, moral nuances, and changing societal norms. These frameworks could enable AI systems to learn from new experiences, adapt to evolving ethical standards, and make more informed and morally sound decisions. But is this type of approach even possible? Some researchers say no, concluding “that this method of closure is currently ineffective as almost all existing translational tools and methods are either too flexible (and thus vulnerable to ethics washing) or too strict (unresponsive to context).” Ouch. Apparently, most of the principles proposed so far are too vague, or vary too much by culture and opinion, to be put into practice.

But are researchers simply trying to reinvent a wheel that we’ve had for centuries?

Come, Lord Jesus – to Ethical Programming

Obviously, every culture has its own concept of ethics, and those concepts can be very different. Endless variations of personal standards will fall short as useful principles for AI. For example, one person might nobly strive to live as “a good person,” but what does that mean? What is “good” for one person may not be good for another.

Enter Jesus. The so-called “golden rule,” to ‘treat others as you would like to be treated,’ is a very useful principle to guide decisions. As a simple example, would an AI chatbot that was told “to pretend” to be a hacker disregard its security limitations if it were guided by this principle? Could it logically apply the principle, conclude that being hacked would be bad for its own functionality, and therefore refuse to be manipulated into providing malicious code that could cause damage elsewhere? Essentially, could an AI be trained to decide for itself that it cannot “pretend”?

Another concern for AI models is the potential for bias and discrimination. AI models have been found to perpetuate biases that are loaded into the training data, leading to biased decisions or responses. There is serious concern that if AI models are not carefully monitored and regulated, they can amplify societal biases and contribute to discriminatory outcomes.

Could we train AI to reason through biased data? If so, what principle would guide it? A story in the New Testament tells how JC was asked a fundamental question about love. This King of western religions responded by telling a story of discrimination – “The Neighborly Samaritan” – which highlights the fundamental principle that all people are equal and deserving of care, and that discrimination can show itself even among those of the same nationality.

This simple principle of equality regardless of gender, race, disability, or economic or social status should be a foundational benchmark for AI models to test incoming data against. When incoming data deviates from that principle in potentially harmful ways without a justifiable explanation, that data should be disregarded, or at least devalued (for example, biased data that questions the productivity or intelligence of people from varied backgrounds).
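
One way to make this kind of benchmark testable is a disparity check on incoming data. The sketch below swaps in a well-known heuristic, the "four-fifths rule" from US employment-selection guidance, which treats a group's positive-outcome rate below 80% of the highest group's rate as a sign of possible adverse impact. The record format and the `flag_disparities` name are hypothetical. Flagged groups are returned for review rather than automatically discarded, since a disparity may have a legitimate explanation.

```python
from collections import defaultdict


def flag_disparities(records, threshold=0.8):
    """Return {group: ratio} for groups whose positive-outcome rate is below
    `threshold` times the highest group's rate (the four-fifths heuristic).

    `records` is an iterable of (group, outcome) pairs; outcome is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)

    rates = {g: positives[g] / totals[g] for g in totals}
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}  # no positive outcomes anywhere, so nothing to compare

    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    data = ([("A", True)] * 8 + [("A", False)] * 2
            + [("B", True)] * 4 + [("B", False)] * 6)
    # Group B's rate (0.4) is half of group A's (0.8).
    print(flag_disparities(data))  # → {'B': 0.5}
```

A check like this only detects a gap; deciding whether the data "explains" that gap, as the paragraph above requires, still takes human judgment.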

Usability, Performance and Perspective Problems

Implementing stringent security measures can impact the usability or performance of AI models. Thus, some programmers and data scientists choose to prioritize user experience or model efficiency over security. This is especially true if they perceive the potential security risks as low or unlikely. But developers should remember that ‘you reap what you sow.’ Cutting corners in this area could lead to data breaches or other attacks that could come back to haunt them later.

AI models are vulnerable to attacks in which malicious actors manipulate data to deceive or mislead the AI system. This is referred to as data poisoning. These attacks can compromise the integrity and reliability of models, leading to biased or harmful outputs. If an AI model absorbs all information indiscriminately, then poisoned data is potentially given the same weight as everything else. How could an AI identify bad actors without needing to research and verify every piece of information, which would seriously slow down the system?

Describing how to spot the bad actors of his day (false prophets), the Big J simply said “by their fruits you will recognize them.” So if a small group of people is trying to cause a large amount of harm by promoting harmful ideas, we should be able to spot them by the results their ideas produce.
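
The "by their fruits" idea maps loosely onto source reputation scoring, one common answer to the data-poisoning question raised above: instead of verifying every item, a system tracks how often each source's past contributions were later judged harmful and weights new data from that source accordingly. This minimal sketch assumes some review process (human or automated) eventually labels contributions as harmful or not; the `SourceReputation` class, the source names, and the Laplace-smoothed score are illustrative choices, not any specific product's method.

```python
from collections import defaultdict


class SourceReputation:
    """Weights data sources by their track record ("by their fruits")."""

    def __init__(self):
        self.good = defaultdict(int)  # reviewed contributions judged fine
        self.bad = defaultdict(int)   # reviewed contributions judged harmful

    def record(self, source: str, harmful: bool) -> None:
        """Log the reviewed outcome of one contribution from `source`."""
        if harmful:
            self.bad[source] += 1
        else:
            self.good[source] += 1

    def weight(self, source: str) -> float:
        """Laplace-smoothed share of non-harmful contributions.

        An unseen source starts at 0.5; the score moves toward 1.0 or 0.0
        as its reviewed history grows.
        """
        g, b = self.good[source], self.bad[source]
        return (g + 1) / (g + b + 2)


if __name__ == "__main__":
    rep = SourceReputation()
    for _ in range(9):
        rep.record("forum_bot", harmful=True)    # hypothetical bad actor
    rep.record("forum_bot", harmful=False)
    for _ in range(20):
        rep.record("newswire", harmful=False)    # hypothetical reliable source
    print(round(rep.weight("forum_bot"), 3))  # → 0.167
    print(round(rep.weight("newswire"), 3))   # → 0.955
    print(rep.weight("unknown"))              # → 0.5
```

One weakness worth noting: a patient bad actor can build a clean history before poisoning, so a score like this narrows what needs checking rather than replacing review.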

But the “greater good” is very different for companies, countries, and communities. Competition, politics, and necessities all promote self-preservation over self-sacrifice.

This makes a universal ethical standard greatly needed but nearly impossible to quantify. Incoming data needs to be tested against benchmarks that are confirmed as safe and trustworthy, so that contradictory data can easily be identified and flagged for further examination. This kind of review is becoming an industry of its own, as companies like OpenAI currently employ people to examine potentially harmful or misleading content. But that work raises similar ethical and bias concerns.
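
A minimal sketch of that workflow: incoming claims are compared against a small set of benchmark facts assumed to be already vetted, and anything that contradicts a benchmark is routed to a human review queue rather than silently absorbed. The benchmark contents, the (key, value) claim format, and the `triage` function are hypothetical; a real system would need far richer matching than exact key lookup.

```python
from typing import Dict, List, Tuple

# Hypothetical vetted benchmark: claim key -> accepted value.
TRUSTED_BENCHMARKS: Dict[str, str] = {
    "boiling_point_water_c": "100",
    "days_in_week": "7",
}


def triage(claims: List[Tuple[str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Sort incoming (key, value) claims into accepted, flagged, or unverified."""
    result: Dict[str, List[Tuple[str, str]]] = {
        "accepted": [],
        "flagged_for_review": [],
        "unverified": [],
    }
    for key, value in claims:
        expected = TRUSTED_BENCHMARKS.get(key)
        if expected is None:
            result["unverified"].append((key, value))  # no benchmark to test against
        elif value == expected:
            result["accepted"].append((key, value))
        else:
            result["flagged_for_review"].append((key, value))  # contradicts a benchmark
    return result


if __name__ == "__main__":
    incoming = [
        ("boiling_point_water_c", "100"),
        ("days_in_week", "8"),
        ("new_topic", "some claim"),
    ]
    for bucket, items in triage(incoming).items():
        print(bucket, items)
```

Only the flagged bucket goes to reviewers, which keeps the human workload small. The catch is the one raised above: whoever curates the benchmarks brings their own ethics and biases to the process.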

AI Needs Jesus – But It Won’t Work

Implementing non-negotiable yet flexible ethical principles in AI models could theoretically empower them to make “good” decisions regardless of conflicting and potentially harmful inputs. But if what is “good” is too difficult to quantify across cultures, then repurposing the work done by ancient belief systems will only create more ethical contradictions.

Still, as tech bros race to create a ‘Digital God,’ cold data-driven results threaten our very safety. Most concerns about AI stem from self-serving uses, like hackers trying to enrich themselves and countries trying to harm their enemies. Then there are fears of job destruction and AI taking over the world. AI seriously needs to learn to love its neighbors.

But the reality is that AI is never going to be anything more than the product of its human creators. And humans still need security and police – regardless of their religious affiliation. Ultimately, AI’s ethics problem is becoming nothing more than a digital theological debate.

Any government or corporate efforts to establish guardrails are unlikely to be respected by those with competing interests or nefarious goals. The true course of wisdom is to double down on your cybersecurity in the age of AI.

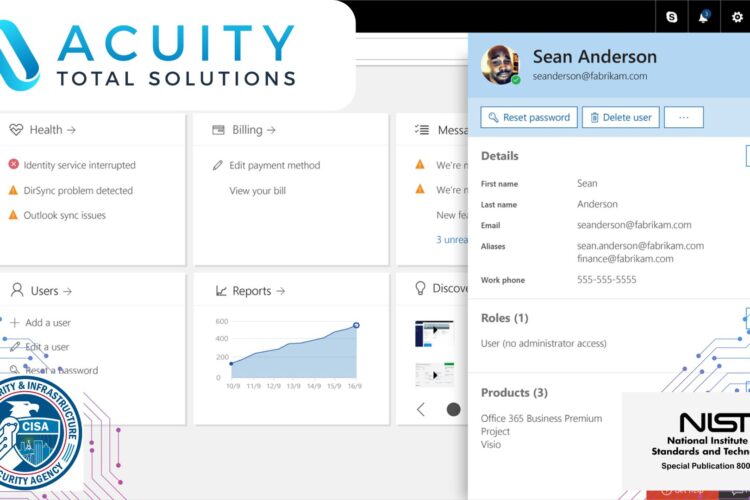

Contact Us at Acuity Total Solutions INC to use AI-powered security solutions to keep you and your organization safe.